Hill climbing

In numerical analysis, hill climbing is a mathematical optimization technique which belongs to the family of local search. It is an iterative algorithm that starts with an arbitrary solution to a problem, then attempts to find a better solution by making an incremental change to the solution. If the change produces a better solution, another incremental change is made to the new solution, and so on until no further improvements can be found.

Note : Gradient descent is a first-order iterative optimization algorithm for finding the minimum of a function. To find a local minimum of a function using gradient descent, one takes steps proportional to the negative of the gradient (or approximate gradient) of the function at the current point. If instead one takes steps proportional to the positive of the gradient, one approaches a local maximum of that function; the procedure is then known as gradient ascent.

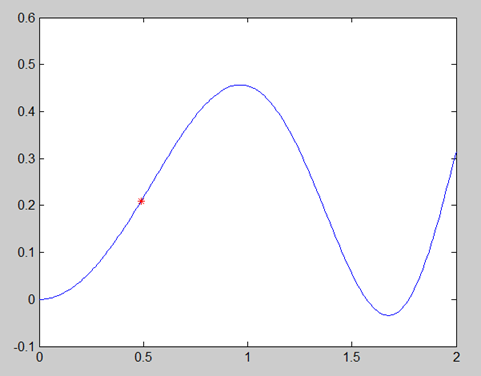

Starting position with our y=f(x)

In this exercise we will try to implement hill climbing over the function y=f(x), with a fixed starting point. This can be archived by taking minor steps in x direction to either left or right on axis. We also make assumption, that steps are done only with fixed step size, however its is recommended to make smaller steps, and you are converging into your local (if lucky then global) maximum or minimum.

- Initialize graph and position.

- Take a minor step to the left and right.

- Decide if step right or left was better, and if they are better than actual position.

- Take a step in best direction.

- Repeat until converge.

| Data | Value |

|---|---|

| Source | https://en.wikipedia.org/wiki/Hill_climbing |

| Code | Hill_climbing_code.zip |

| Solution code | Hill_climbing_solution_code.zip |